Things don't always work out as we planned.

For anyone working in IT I imagine that this is one of the least ground-breaking statements they could read. Yet it is so easy to forget, and we still find ourselves caught out when we have established carefully laid plans and they start to struggle when faced with grim reality.

The value of "Responding to change over following a plan" is one of the strongest and most important in the Agile Manifesto. It is the one that runs most contrary to the approaches that went before, and it really defines the concept of agility. Often the difference between success and failure depends upon how we adapt to situations as they develop, and having that adaptability baked into the principles of our approach to software development is invaluable. The processes that have grown to be synonymous with agility - the use of sprint iterations, prioritisation of work for each iteration, backlog grooming to constantly review changing priorities and delivering small pieces of working software - all help to support the acceptance of change and minimise its disruption.

Being responsive to change, however, is not just for our product requirements. Even when striving to be accepting of change in requirements we can still suffer if we impose inflexibility in the development of our testing infrastructure and supporting tools. Test automation in particular is an area where it is important to mirror the flexibility that is adopted in developing the software. The principle to "harness change for our customers' competitive advantage" is as important in automation as it is in product development, if we're to avoid hitting problems when the software changes under our feet.

Not Set in Stone

A situation that I encountered earlier this year whilst working at RainStor provided a great demonstration of how not taking a flexible approach to test automation can lead to trouble.

My team had recently implemented a new API to support some new product capabilities. As part of delivering the initial stories there was a lot of work involved in supporting the new interface. The early stories were potentially simple to test, involving fixed format JSON strings being returned by the early API. This led me to suggest whole string comparison as a valid approach to deliver an initial test automation structure that was good enough to test these initial stories. We had similar structures elsewhere in the automation, whereby whole strings returned from a process were compared after masking variable content, so the implementation was much simpler to deliver than a richer parsing approach. This would provide a solution suitable for adding checks covering the scope of the initial work, allowing us to focus on the important back end installation and configuration of the new service. The approach worked well for the early stories, and put us in a good starting position for building further stories.
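As a rough illustration of that style of check, the sketch below masks variable content and then compares whole strings. The field names (`timestamp`, `id`), the mask patterns and the function names are all invented for this example; they are assumptions, not the actual RainStor automation.

```python
import re

# Hypothetical patterns for content that varies between runs.
MASKS = [
    (re.compile(r'"timestamp": "[^"]*"'), '"timestamp": "<MASKED>"'),
    (re.compile(r'"id": \d+'), '"id": <MASKED>'),
]


def mask_variable_content(text):
    """Replace run-specific values with fixed placeholders."""
    for pattern, replacement in MASKS:
        text = pattern.sub(replacement, text)
    return text


def matches_expected(actual, expected):
    """Compare two response strings once variable content is masked."""
    return mask_variable_content(actual) == mask_variable_content(expected)


expected = '{"id": 1, "status": "ok", "timestamp": "2014-01-01T00:00:00"}'
actual = '{"id": 42, "status": "ok", "timestamp": "2014-06-30T09:15:00"}'
print(matches_expected(actual, expected))  # True
```

A check like this is cheap to write, but it relies on the response keeping exactly the same layout from one call to the next.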

Over the course of the next sprint, however, the interface increased in complexity, with the result that the strings returned became richer and more variable in sorting and content. This made a full string comparison approach far less effective as the string format was unpredictable. On returning to the office from some time away it was clear that the team working on the automation had started to struggle in my absence. The individuals working on the stories were putting in a valiant effort to support the changing demands, but the approach I'd originally suggested was clearly no longer the most appropriate one. What was also apparent was that the team were treating my original recommendation as being "set in stone" - an inflexible plan - and were therefore not considering whether an alternative approach might be more suitable.
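To make the problem concrete, here is a small sketch of two responses that carry the same data in a different key order. The payloads are made up for illustration. The strings differ, yet the parsed content is identical.

```python
import json

# Two hypothetical responses with the same data in a different key order.
first = '{"name": "archive", "rows": 10}'
second = '{"rows": 10, "name": "archive"}'

strings_equal = first == second
parsed_equal = json.loads(first) == json.loads(second)
print(strings_equal, parsed_equal)  # False True
```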

What went wrong and why?

This was a valuable lesson for me. I had not been nearly clear enough that my initial idea was just that: a suitable starting point, open to change in the face of new behaviours. In hindsight I'd clearly given the impression that I'd established a concrete plan for automation, and the team were attempting to work to it even in the face of difficulty. In fact my intention had been to deliver some value early and provide a 'good enough' automation platform. There was absolutely no reason why this should not be altered, augmented or refactored appropriately as the interface developed.

To tackle the situation I collaborated with one of our developers to provide a simple JSON string parser in Python, allowing tests to pick specific values from the string, against which a range comparison or other checks could be applied. I typically learn new technologies faster when presented with a starting point than when having to research from scratch so, as I didn't know Python when I started, working with a developer to deliver a simple starting point saved me hours here. Within a couple of hours we had a new test fixture which provided a more surgical means of parsing, value retrieval and range comparison from the strings. The original mechanism was still necessary for the API interaction and string retrieval. This new fixture was very quickly adopted into the existing automated tests, and the stories were delivered successfully thanks to an impressive effort by the team to turn them around.
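A minimal sketch of that kind of fixture might look like the following. It is not the code we wrote; the function names, the path convention and the sample response are assumptions made for the example, using only Python's standard `json` module.

```python
import json


def get_value(json_text, path):
    """Walk a parsed JSON document using a list of dict keys and list indexes."""
    value = json.loads(json_text)
    for step in path:
        value = value[step]
    return value


def in_range(value, low, high):
    """Return True if low <= value <= high."""
    return low <= value <= high


# Hypothetical response; only the value under test is inspected.
response = '{"status": "ok", "results": [{"name": "archive", "rows": 1042}]}'
rows = get_value(response, ["results", 0, "rows"])
print(rows, in_range(rows, 1000, 1100))  # 1042 True
```

Because the check targets a single value, changes to key ordering or to unrelated fields elsewhere in the response no longer break the test.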

Familiar terms, newly applied

We have a wealth of principles and terms to describe the process of developing through a series of incremental stages of valuable working software. This process of delivering can place pressure on automation development. Testers working in agile teams are exposed to working software to test far earlier than in longer cycle approaches, where a test automation platform could be planned and implemented whilst waiting for the first testing stage to start.

Just as we can't expect to deliver the complete finished product out of the first sprint, we shouldn't necessarily expect to deliver the complete automation solution to support an entire new development in the first sprint either. The trick is to ensure that we prioritise appropriately to provide the minimum capabilities necessary at the time they are needed. We can apply some familiar concepts to the process of automation tool development that help to better support a growing product.

- Vertical Slicing - In my example the initial automation was intended as a Vertical Slice. It was a starting point to allow us to deliver testing of the initial story and prepare us for further development - to be built upon to support subsequent requirements. As with any incremental process, if at some point during automation development it is discovered that the solution needs restructuring/refactoring or even rewriting in order to support the next stage of development, then this is what needs to be done.

-

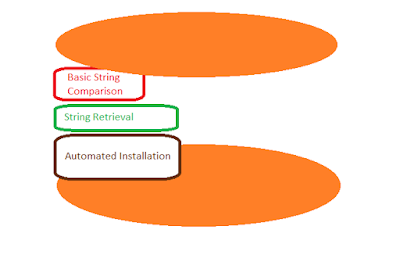

The Hamburger Method - For anyone not familiar with the idea, Gojko Adzic does a good job of summarising the "Hamburger Method" here, which is a neat way of approaching incremental delivery/vertical slicing that is particularly applicable to automation. In the approach we consider the layers or steps of a process as layers within the Hamburger. Although we require a complete hamburger to deliver value, not every layer needs to be complete and each layer represents incremental value which can be progressively developed to extend the maturities and capability of the product. Taking the example above, clearly the basic string comparison was not the most complete solution to the testing problem. It did, however, deliver sufficient value to complete the first sprint.

We then needed to review and build out further capabilities to meet the extended needs of the API. This didn't just include proper parsing of the JSON but also parameterised string retrieval, security configuration on the back end and the ability to POST to as well as GET from the API.

- Minimum Viable Product - The concept of a minimum viable product is one that allows a process of learning from the customers. I like this definition from Eric Ries:

"the minimum viable product is that version of a new product which allows a team to collect the maximum amount of validated learning about customers with the least effort."

It's a concept that doesn't obviously map to automation development. When we develop test architectures, however, the people who need to use them are essentially our customers, so considering an MVP is a valid step. Sometimes, as in my situation above, we don't know how we're going to want to test something. It is only in having an initial automation framework that we can elicit learning and make decisions about the best approach to take. By delivering the simple string comparison we learned more about how to test the interface and used this knowledge to build our capability in the Python module, which grew over time to include more complex features such as different comparison methods and indexing structures to retrieve values from the strings.

In another example where we recently implemented a web interface (rather fortunate timing after an 8 year hiatus, given my later departure and subsequent role in a primarily web-based organisation) our "Minimum Viable Automation" was a simple Selenium WebDriver page object structure driven through JUnit tests of the initial pages. Once we'd established that, we learned more about how we wanted to test, and went on in subsequent sprints to integrate a Cucumber layer on top, as we decided that it was the best mechanism for us given the interface and nature of further stories.

Being Prepared

Whilst accepting change is a core concept in agile approaches, interestingly not all organisations or teams working with agile methods apply the same mindset to their supporting structures and tools. Having autonomous, self-managing teams can be a daunting prospect for companies more accustomed to a command-and-control structure and high levels of advance planning. The temptation in this situation is to try to bring as many of the supporting tools and processes as possible into a centralised group to maintain control and provide the illusion of efficiency. This is an understandable inclination, and there are certainly areas of supporting infrastructure that benefit from being managed in one place, such as hardware/cloud provisioning. I don't believe that this is the correct approach for test automation. Attempting to solve the problems of different teams with a single central solution takes autonomy away from teams by removing their ability to solve their own problems and find out what works best on their products. This is a big efficiency drain on capable teams. In the example above, our ability to alter our approach was instrumental in successfully recovering when the original approach had faltered.

My personal approach to tool creation/use is simple - focus on preparation over planning, and put in place what you need as you go. Planning implies knowing, or thinking that you know, what will happen in advance. Planning is essential in many disciplines; however, in an unpredictable work environment placing too much emphasis on planning can be dangerous. Unexpected situations undermine plans and leave folks confused about how to progress. Preparation is about being in a position to adapt effectively to changing situations. It is about having the right knowledge and skills to respond to change and adapt accordingly, for example through having a 'Square Shaped Team' with a breadth and depth of relevant skills to take on emergent tasks. By all means centralise a body of knowledge of a range of tools to assist teams in their selection of tools or techniques, but leave the teams to decide which is best for them. In the web testing example above, whilst we'd not tested a web interface before, through maintaining an up to date knowledge in house of technologies and approaches that others were using we were still well prepared to start the process of creating a suitable test architecture when the need arose.

By building a team with a range of knowledge and capabilities we are preparing ourselves for the tasks ahead. Using approaches like vertical slicing, the hamburger method and minimum viable product in the creation of our tools allows us to apply that ability in a manner appropriate for the fluidity of agile product development. Or to put it more simply, by accepting that change happens, and using tried and tested approaches to incrementally deliver value in the face of change, we can ensure that we are prepared when it comes.

References

- Minimum Viable Product - Eric Ries http://www.startuplessonslearned.com/2009/08/minimum-viable-product-guide.html

- The Hamburger Method - Gojko Adzic http://gojko.net/2012/01/23/splitting-user-stories-the-hamburger-method
